IBM quietly launched its DDR5 upgrade for Power servers. I know what you’re thinking. DDR5? That’s yesterday’s news. But IBM is doing something very different with DDR than other vendors do. Look at how much bandwidth they can put on a server:

4S 4th Gen Xeon (192 “Sapphire Rapids” cores): 1.2 TB/s

2S 4th Gen EPYC (192 Zen 4 cores): 922 GB/s

4S IBM E1050 (192 SMT-4 cores): 4.8 TB/s

4.8 TB/s sounds impossible. Four times the next-fastest x86 server? To put that in perspective, that’s as much memory bandwidth as a pair of NVIDIA’s blazing-fast H100 GPUs. If you know CFD, you know that we’ve been unable to get the most out of a CPU for years due to inadequate bandwidth, which has driven the transition to GPUs, but as of the launch of DDR5 for Power10, there’s now a CPU server on the market that can actually deliver data to the cores as fast as they can chew through it.
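Since all three servers above have 192 cores, the comparison is easy to restate as bandwidth per core. The snippet below is a minimal sketch that uses only the figures quoted in the list; the function and variable names are made up for illustration.

```python
# Figures quoted in the list above: (label, cores, aggregate bandwidth in GB/s).
SERVERS = [
    ("4S 4th Gen Xeon", 192, 1200.0),
    ("2S 4th Gen EPYC", 192, 922.0),
    ("4S IBM E1050", 192, 4800.0),
]


def bandwidth_per_core(cores: int, total_gb_s: float) -> float:
    """Aggregate memory bandwidth divided evenly across cores, in GB/s."""
    return total_gb_s / cores


if __name__ == "__main__":
    for label, cores, total in SERVERS:
        per_core = bandwidth_per_core(cores, total)
        print(f"{label}: {per_core:.2f} GB/s per core")
```

This is a peak-number comparison only; it says nothing about what a real CFD code sustains.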

Let’s look at why IBM’s design philosophy puts it in a different place than either x86 or NVIDIA. As with every design, there are tradeoffs.

The x86 world is designed first and foremost for flexibility. AMD and Intel both offer a dizzying array of SKUs, and importantly, they don’t make their own servers. EPYC and Xeon servers appear in a wide variety of form factors and from a huge range of vendors. This keeps costs relatively low and ensures that no matter what you need, there’s an x86 server for you. It also means, among other things, that x86 CPUs are built for compatibility with commodity parts, like commodity DDR memory DIMMs.

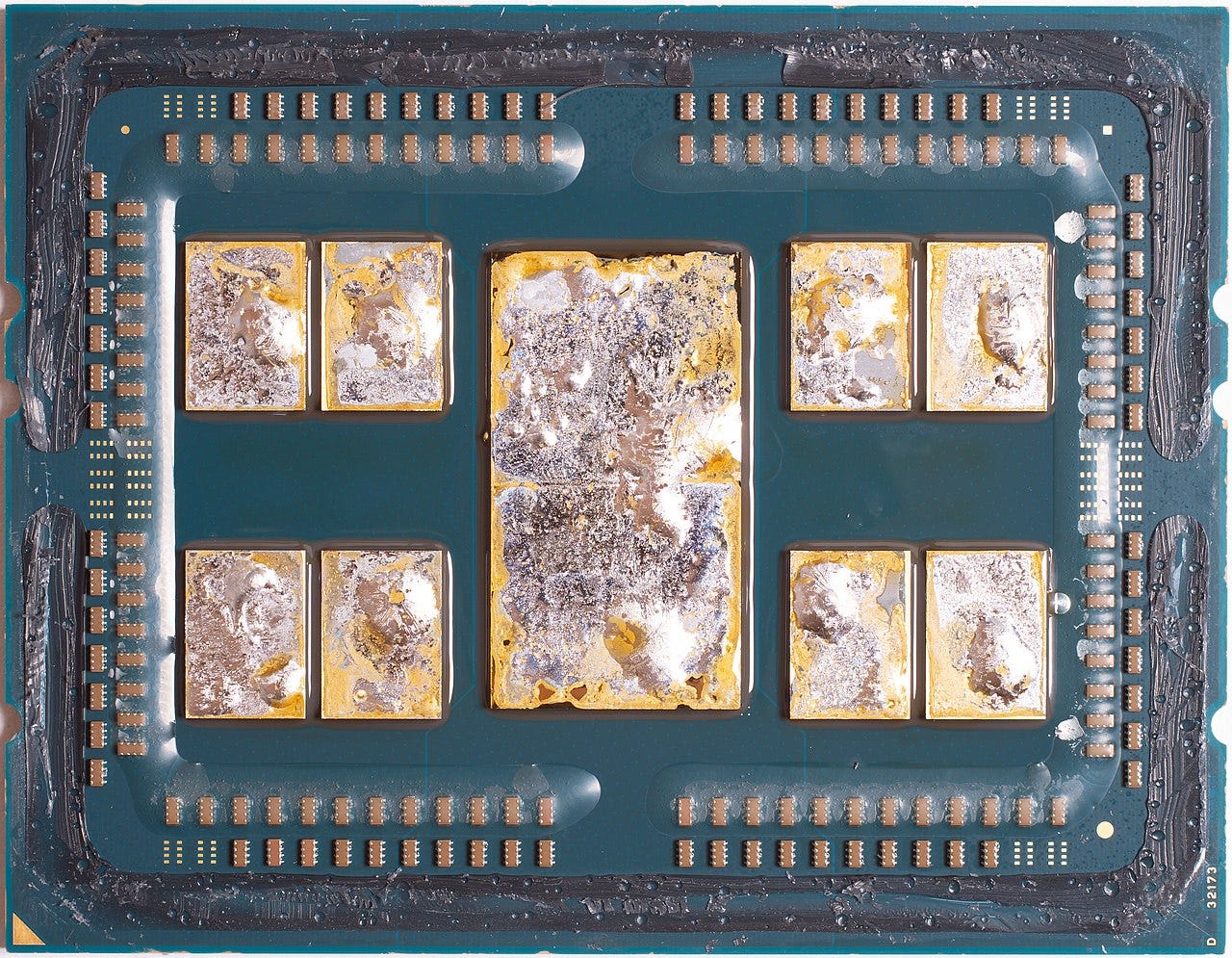

But, as a software developer, it’s easy to forget that a CPU is a physical machine. The DDR memory interface is big and chunky, and it takes a lot of silicon. This photo of a delidded EPYC 7702 (source) shows the massive I/O die in the middle - that’s where the memory and PCIe devices connect.

4th gen EPYC (formerly “Genoa”) isn’t much different, because DDR5 isn’t much different from DDR4. Currently, 4th gen EPYC is the biggest CPU on the block, with twelve 64-bit memory channels. The entire CPU has a 768-bit bus, which really isn’t much compared to GPUs. An H100 has a 5120-bit bus. Really.
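The bus-width arithmetic can be sketched in a few lines. The channel count and channel width come from the paragraph above; the DDR5-4800 transfer rate (4800 MT/s) is an assumption not stated in the article, chosen because it reproduces the roughly 922 GB/s quoted earlier for a two-socket EPYC server. The function names are illustrative.

```python
def bus_width_bits(channels: int, bits_per_channel: int = 64) -> int:
    """Total width of the memory bus across all channels of one socket."""
    return channels * bits_per_channel


def peak_bandwidth_gb_s(channels: int, mt_per_s: int, sockets: int = 1,
                        bits_per_channel: int = 64) -> float:
    """Theoretical peak bandwidth: bytes per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits(channels, bits_per_channel) // 8
    return sockets * bytes_per_transfer * mt_per_s * 1e6 / 1e9


if __name__ == "__main__":
    # 12 channels x 64 bits, as described in the text.
    print(bus_width_bits(12))  # 768
    # ASSUMPTION: DDR5-4800 (4800 MT/s), two sockets.
    print(f"{peak_bandwidth_gb_s(12, 4800, sockets=2):.1f} GB/s")
    # Ratio of the H100 bus width quoted in the text to the EPYC bus width.
    print(f"{5120 / bus_width_bits(12):.2f}x")
```

Bus width alone does not determine bandwidth, since HBM and DDR5 run at different per-pin rates, but it shows how far apart the two designs start.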

Server GPUs take a radically different approach to achieve their massive bandwidth. They use a relatively new type of memory called High-Bandwidth Memory (HBM). HBM relies on 3D stacking and complex packaging to greatly increase the memory bandwidth. Due to the way the chips are packaged, adding another die to the stack adds bandwidth, so the 4-Hi stacks in NVIDIA’s H100 GPU each have a 1024-bit bus. This, however, is a very expensive way to package memory, and moreover, you can’t fit a lot of memory on a card this way. That’s why a CPU server can host around 100x the memory that a GPU can.

So what did IBM do differently? They designed their own memory interface, called OMI, which is far, far more economical on space than DDR. This allows massive memory bandwidth, but the problem is there’s no such thing as OMI memory. Consequently, IBM servers use custom DIMMs that have an embedded memory controller. With the new DDR5 technology, each DIMM hosts two memory channels. On IBM’s larger servers, each socket hosts 16 DIMMs, for a whopping 32 memory channels per CPU! That is how IBM is able to deliver bandwidth comparable to GPUs without compromising memory capacity.
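To make the channel count concrete, here is a rough sketch using only numbers from this article. It assumes the 4.8 TB/s figure quoted earlier for the four-socket E1050 corresponds to a fully populated configuration with 16 DIMMs per socket; the article does not state that explicitly, and the names below are illustrative.

```python
DIMMS_PER_SOCKET = 16    # from the text
CHANNELS_PER_DIMM = 2    # from the text
SOCKETS = 4              # 4S E1050
TOTAL_GB_S = 4800.0      # 4.8 TB/s, as quoted earlier in the article


def channels_per_socket(dimms: int, channels_per_dimm: int) -> int:
    """Memory channels exposed by one socket."""
    return dimms * channels_per_dimm


def implied_gb_s_per_channel(total_gb_s: float, sockets: int,
                             per_socket_channels: int) -> float:
    """Per-channel bandwidth implied by the aggregate figure.

    ASSUMPTION: the aggregate is spread evenly over every channel
    of a fully populated system.
    """
    return total_gb_s / (sockets * per_socket_channels)


if __name__ == "__main__":
    per_socket = channels_per_socket(DIMMS_PER_SOCKET, CHANNELS_PER_DIMM)
    print(per_socket)                # 32 channels per CPU
    print(SOCKETS * per_socket)      # 128 channels in the server
    print(implied_gb_s_per_channel(TOTAL_GB_S, SOCKETS, per_socket))  # 37.5
```

The implied per-channel figure is in the same range as a commodity DDR5 channel, which fits the article's point: the advantage comes from having far more channels, not faster ones.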

Every design does have tradeoffs. Commodity DDR5 DIMMs are cheap. Custom memory DIMMs are…not cheap. And, while GPUs have a very low ceiling on how much memory they can host, Power10 has a pretty high floor. Right now, server GPUs max out at 128 GB on AMD’s MI300 Instinct…whereas, if you want the massive bandwidth IBM DDR5 offers, you start at 1 TB per socket (64 GB x 16 DIMMs) and go up from there. There is no low-memory option. If you halve the number of DIMMs, you halve the number of memory channels, too.
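The capacity floor and the cost of removing DIMMs can be sketched the same way. The 64 GB DIMM size and the two channels per DIMM come from the article; treating bandwidth as proportional to channel count is a simplifying assumption, and the helper name is hypothetical.

```python
def socket_config(dimms: int, gb_per_dimm: int = 64,
                  channels_per_dimm: int = 2, full_dimms: int = 16) -> dict:
    """Capacity, channel count, and relative bandwidth for one socket.

    ASSUMPTION: bandwidth scales linearly with the number of populated
    channels, relative to a fully populated socket.
    """
    return {
        "capacity_gb": dimms * gb_per_dimm,
        "channels": dimms * channels_per_dimm,
        "relative_bandwidth": dimms / full_dimms,
    }


if __name__ == "__main__":
    for dimms in (16, 8):
        print(dimms, socket_config(dimms))
```

With 16 DIMMs the socket holds 1024 GB over 32 channels; with 8 DIMMs it holds 512 GB over 16 channels, so saving on memory also gives up half the bandwidth under this model.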

IBM’s been cooking this up for a long time. Each generation since Power9, we’ve seen progressive rethinking of how to interface DDR with a CPU to boost throughput while maintaining capacity. With the new DDR5 memory technology, the gloves are off, and IBM is demonstrating clear leadership in this technology space.

If you’d like to learn more about what IBM Power with DDR5 is capable of, contact us at info@tachos-hpc.com.

"If you know CFD, you know that we’ve been unable to get the most out of a CPU for years due to inadequate bandwidth, which has driven the transition to GPUs, but as of the launch of DDR5 for Power10, there’s now a CPU server on the market that can actually deliver data to the cores as fast as they can chew through it."

Interesting analogy regarding Computational Fluid Dynamics, and pipelines of code/data (I think queueing theory applies, and is mathematically related). But the above sums up the problem: if you can't get your data and code into the CPU fast enough, your CPU idles, and you're wasting money on the CPU where an investment in memory bandwidth to the CPU would be better.

Brings to mind comparisons between PCs and mainframes, where mainframe I/O bandwidth far exceeded PC I/O bandwidth, relative to its CPU processing power. And where IBM implemented/offloaded a lot of the I/O processing in the I/O channels to minimize CPU work for I/O. As a result, I think you should have used "decades" rather than "years" for this problem, because the problem has existed since the introduction of the PC in 1981.